My Wife and I Built an AI Agent to Fix Our Budget

One morning, like many couples, my wife (Alicia) and I found ourselves looking at our budget tracking app and feeling trapped in the endless cycle of expense monitoring. We'd dutifully log in, squint at colorful pie charts, and ask ourselves:

Why does budget tracking feel so reactive? Why do we still look at our transactions manually to figure out what happened—and why does the process feel so impersonal?

We decided to channel that frustration into an opportunity: build a simple AI agent that would not only help us monitor spending but also serve as a hands-on experiment in agent design. The project was as much about learning how to build an agent end-to-end as it was about improving our budgeting habits.

Project Intent

Our objectives were twofold:

- Address a real need in our daily lives by creating something engaging, more than static dashboards or charts, capable of delivering timely, personalized feedback and analysis we would actually use.

- Deepen our technical and design knowledge by walking through the full lifecycle of an AI agent: infrastructure, cost considerations, data pipelines, orchestration, and coding.

As a Data Scientist, I was drawn to coding and architecture. My wife, a Product Manager, wanted to understand how technical choices shape outcomes when starting from scratch. Together, we approached the project with the mindset that the best way to learn was simply to build, build, and keep building.

Key Learning Areas

- Agent coding in Python: the Groq API, differences between LLMs, LangGraph, software engineering best practices, and security considerations.

- Infrastructure and cost trade-offs: where to run processes, what's free, and what's worth paying for.

- Data management: extracting transactions via GraphQL, validating with Pydantic, and storing in MongoDB.

- Agent orchestration: structuring processes into small, reliable components.

- User experience: designing communications that feel human and motivating rather than generic or punitive.
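To make the data-management item concrete, here is a minimal sketch of validating raw transactions before they are stored. It uses a standard-library dataclass as a dependency-free stand-in for a Pydantic model, and the field names (`id`, `date`, `merchant`, `category`, `amount`) are assumptions for illustration, not Monarch Money's actual schema.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Transaction:
    """Hypothetical transaction record; field names are illustrative."""
    id: str
    posted: date
    merchant: str
    category: str
    amount: float  # assumed convention: negative means money spent

    def __post_init__(self):
        # Reject records that would be useless for budgeting.
        if not self.id:
            raise ValueError("transaction id must not be empty")
        if not self.category:
            raise ValueError("category must not be empty")


def parse_transaction(raw: dict) -> Transaction:
    """Convert one raw record into a validated Transaction.

    Raises KeyError, ValueError, or TypeError on missing or malformed fields.
    """
    return Transaction(
        id=str(raw["id"]),
        posted=date.fromisoformat(raw["date"]),
        merchant=str(raw.get("merchant", "")).strip(),
        category=str(raw["category"]).strip(),
        amount=float(raw["amount"]),
    )


def parse_all(raw_records: list[dict]) -> tuple[list[Transaction], list[dict]]:
    """Split raw records into validated transactions and rejected rows."""
    valid, rejected = [], []
    for raw in raw_records:
        try:
            valid.append(parse_transaction(raw))
        except (KeyError, ValueError, TypeError):
            rejected.append(raw)
    return valid, rejected
```

Keeping rejected rows instead of dropping them silently makes it easier to notice when the upstream data changes shape.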

The Problem

The challenge wasn't just that budget tracking was tedious—it was that it felt impersonal and reactive. We'd discover we had overspent only after the fact, and then manually analyze our transactions to understand what drove the overspending. We wanted a system that would tell us what was happening, why it was happening, and what we could do to improve our habits in a way tailored to our unique spending patterns and goals.

The Solution: A Lightweight Budget Agent

We developed a minimal yet functional agent that transforms raw transaction data into timely, personalized, and often humorous updates, along with a structured weekly analysis.

Core Features

-

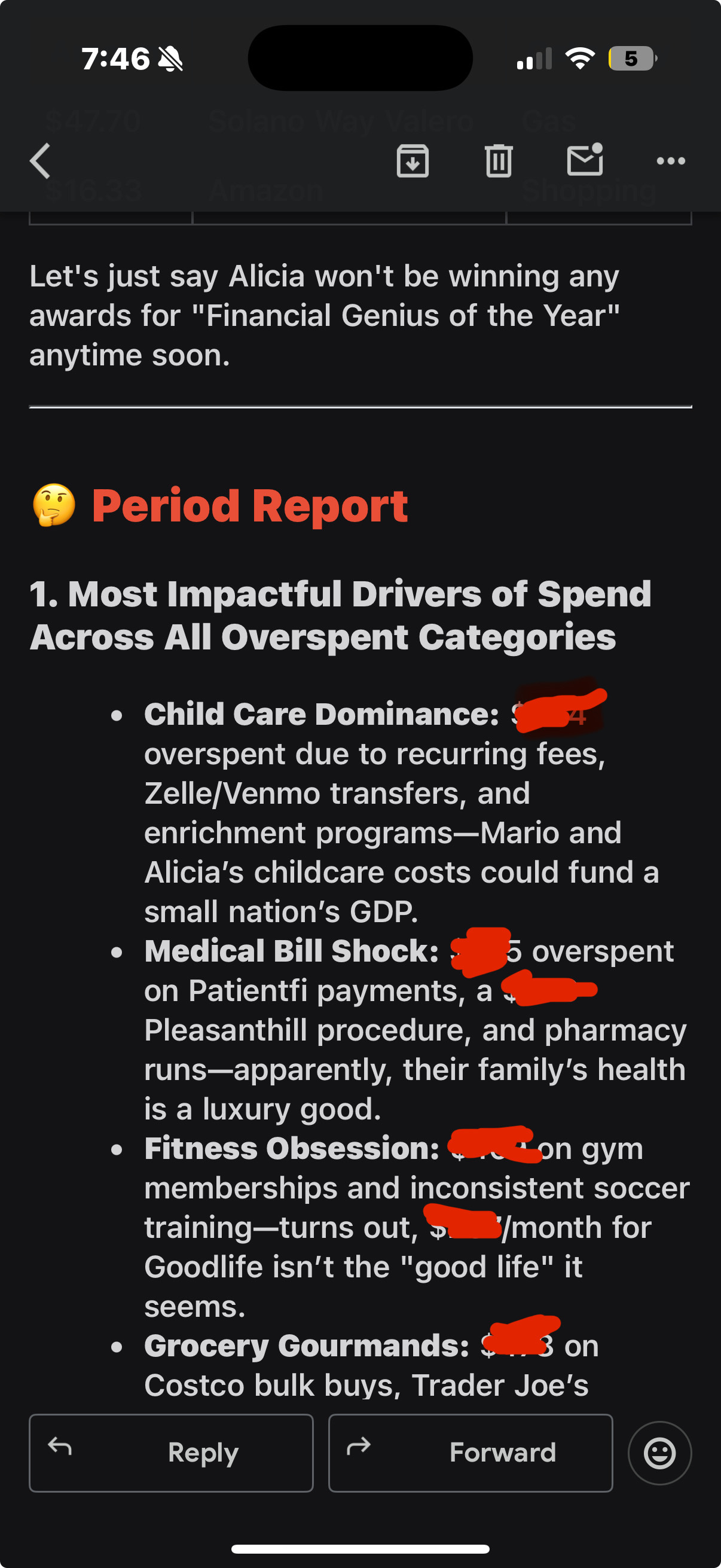

Daily Overspend Alerts: Each morning, the agent highlights categories where spending exceeded our plan. While somewhat intense, this feature directly addresses our need for awareness and accountability.

-

Targeted "Suspicious" Alerts: It flags transactions that break our savings guidelines—such as unnecessary Amazon purchases. These alerts are written with a playful tone, balancing humor with accountability.

-

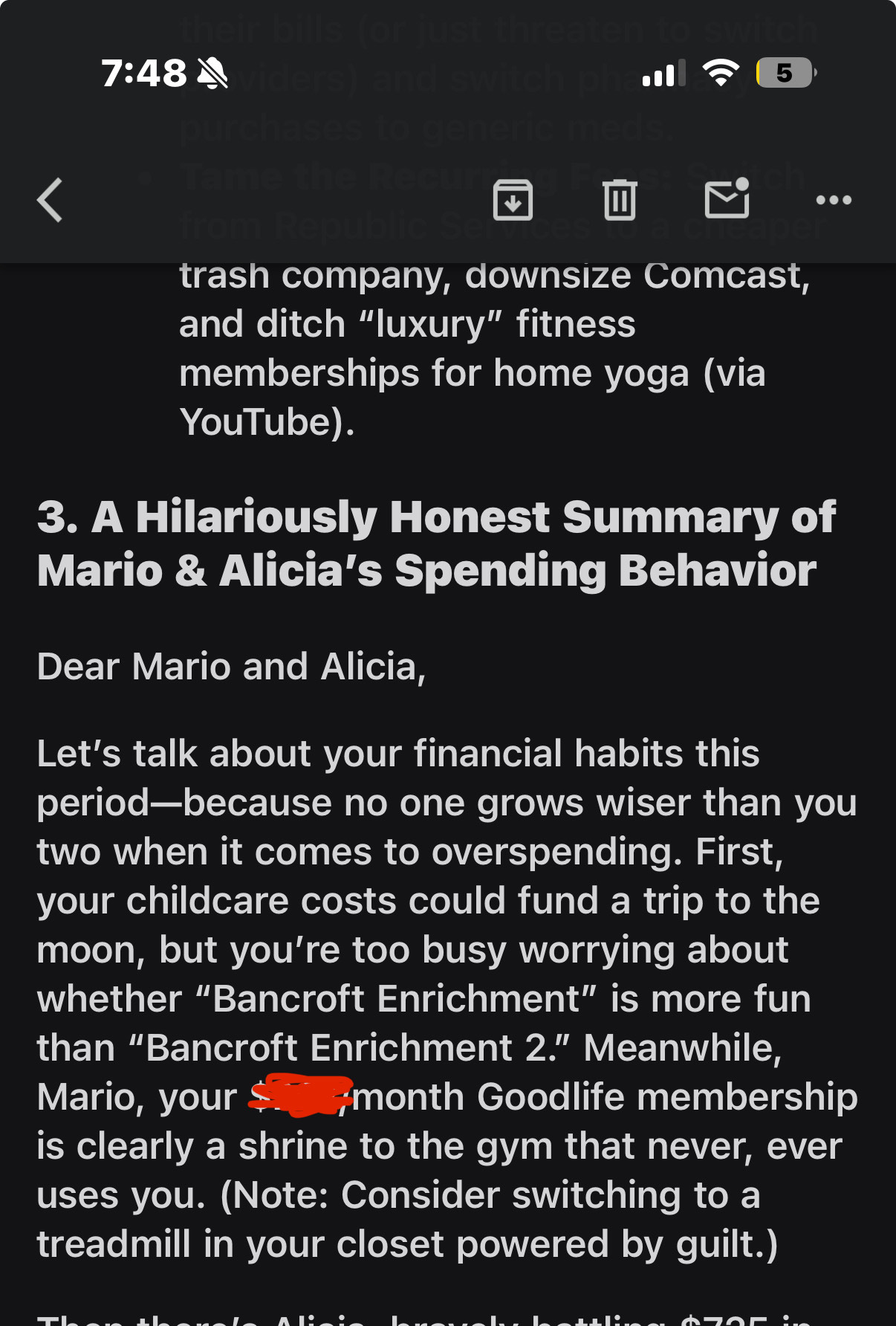

Weekly Spending Reports: Once a week, the system analyzes transaction data, identifies spending drivers, and offers tailored recommendations.
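The checks behind the first two features can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not our exact code: transactions are simplified to `(category, merchant, amount)` tuples with positive amounts meaning money spent, and the "suspicious" rule (a watched merchant plus a per-purchase limit) is a hypothetical example of a savings guideline.

```python
from collections import defaultdict


def spending_by_category(transactions):
    """Sum spending per category from (category, merchant, amount) tuples."""
    totals = defaultdict(float)
    for category, _merchant, amount in transactions:
        totals[category] += amount
    return dict(totals)


def overspent_categories(budget, transactions):
    """Return {category: amount_over} for categories above their plan."""
    totals = spending_by_category(transactions)
    return {
        category: round(spent - budget[category], 2)
        for category, spent in totals.items()
        if category in budget and spent > budget[category]
    }


def suspicious_transactions(transactions, watched_merchants, limit):
    """Flag purchases at watched merchants that exceed a per-purchase limit.

    Both the merchant list and the limit are hypothetical guideline inputs.
    """
    watched = {merchant.lower() for merchant in watched_merchants}
    return [
        (category, merchant, amount)
        for category, merchant, amount in transactions
        if merchant.lower() in watched and amount > limit
    ]
```

Deterministic checks like these decide what gets flagged; the LLM is then only responsible for the wording and tone of the message.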

Technical Design (Summary)

Architecture: Monarch Money → GraphQL extraction → Pydantic validation → MongoDB → LangGraph agent → LLM analysis (Groq) → HTML email via Gmail SMTP, with the whole run scheduled daily by GitHub Actions.

Key components:

- Data ingestion: transactions imported from Monarch Money (our budgeting app) through GraphQL, using an updated community package and a custom device-ID login fix.

- Storage and validation: MongoDB (Motor) with Pydantic schemas.

- Agent orchestration: LangGraph nodes manage each stage (import, alerting, reporting, email).

- LLM usage: Groq API for calling LLMs and getting responses.

- Delivery: Gmail SMTP for HTML emails.

- Automation: GitHub Actions schedules daily execution.

- Cost: Approximately $0.05 per day, with potential to reduce further.
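The orchestration idea in the list above (one small node per stage, each reading and updating a shared state) can be sketched in plain Python. This is a dependency-free stand-in and not LangGraph's API; the stage names and state keys are hypothetical, the import stage returns hard-coded sample totals, and the LLM call is replaced by a stub.

```python
from typing import Callable

State = dict  # shared state handed from one stage to the next
Stage = Callable[[dict], dict]


def import_transactions(state: State) -> State:
    # Stub: the real agent would fetch and validate transactions here.
    sample = [("Shopping", 175.0), ("Groceries", 120.0)]
    return {**state, "totals": sample}


def build_alerts(state: State) -> State:
    """Compare per-category totals against the budget in the state."""
    budget = state["budget"]
    alerts = [
        f"{category} is over budget by {spent - budget[category]:.2f}"
        for category, spent in state["totals"]
        if category in budget and spent > budget[category]
    ]
    return {**state, "alerts": alerts}


def write_report(state: State) -> State:
    # Stub standing in for the LLM-generated analysis.
    summary = f"{len(state['alerts'])} category alert(s) today."
    return {**state, "report": summary}


def compose_email(state: State) -> State:
    """Join the report and alerts into a plain-text email body."""
    body = "\n".join([state["report"], *state["alerts"]])
    return {**state, "email_body": body}


def run_pipeline(stages: list[Stage], state: State) -> State:
    """Run stages in order, recording each stage name in a trace."""
    for stage in stages:
        state = stage(state)
        state = {**state, "trace": [*state.get("trace", []), stage.__name__]}
    return state
```

Because every stage takes a state and returns a new one, each can be tested on its own, and a failing run shows in the trace exactly how far it got.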

The full code and an in-depth article are available in the project repo.

User Experience

Each morning we receive:

- A concise note if a category is over budget.

- A playful alert for guideline-breaking purchases.

Once a week, we also receive a digest summarizing spending drivers and actionable recommendations.

The agent is neither punitive nor overwhelming; it strikes a balance between informative and engaging through witty, sometimes sarcastic, humor.
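For readers curious how such a morning note could be assembled, here is a minimal sketch using only Python's standard library. The function names, subject line, and HTML layout are assumptions for illustration; the send step assumes a Gmail account with an app password and is not exercised here, since it needs network access and real credentials.

```python
import smtplib
from email.message import EmailMessage
from html import escape


def build_alert_email(sender: str, recipient: str, alerts: list[str]) -> EmailMessage:
    """Build an email with a plain-text body and an HTML alternative."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = f"Budget check-in: {len(alerts)} alert(s)"

    # Plain-text fallback for clients that do not render HTML.
    plain = "\n".join(f"- {alert}" for alert in alerts) or "Nothing to report today."
    msg.set_content(plain)

    # Escape alert text so merchant names cannot break the markup.
    items = "".join(f"<li>{escape(alert)}</li>" for alert in alerts)
    html = f"<html><body><h2>Budget check-in</h2><ul>{items}</ul></body></html>"
    msg.add_alternative(html, subtype="html")
    return msg


def send_via_gmail(msg: EmailMessage, username: str, app_password: str) -> None:
    """Send over Gmail's SMTP endpoint (needs network and valid credentials)."""
    with smtplib.SMTP_SSL("smtp.gmail.com", 465) as server:
        server.login(username, app_password)
        server.send_message(msg)
```

In a scheduled job, the credentials would typically come from environment variables or repository secrets rather than being written into the code.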

Next Steps

This is a simple agent with many limitations and room for improvement:

- It uses a hacky workaround to get around Monarch Money's email OTP login step.

- It cannot learn over time, and prompt improvements rely entirely on the user.

- It cannot use tools; it relies too heavily on the LLM for analysis.

- It lacks long-term memory.

- It lacks an evaluation layer to assess output quality over time.

- It lacks policy enforcers to ensure consistency.

We could spend much more time on these improvements, but for now we have decided to move on. There is still much to learn, so we have picked a new project that will let us implement:

- Enhanced memory and retrieval: incorporating retrieval-augmented generation (RAG) and context management.

- Tool integration: enabling the agent to call specialized tools when needed.

- Evaluation layer: tracking quality and output systematically.

- Improved software logic and efficiency: stronger architecture and cleaner code.

Although this project met its goals, our motivation now lies in pushing boundaries further, even if it means over-engineering for the sake of learning. The investment—both time and five cents per day—has been more than worthwhile.

Closing

This project gave us the chance to learn collaboratively, blending technical implementation with product thinking. If you're interested in the design details or in replicating this agent (coding skills required), feel free to reach out—I'd be glad to share ideas and compare approaches.